Modern films and TV shows are filled with spectacular computer-generated sequences. Today, those scenes are typically computed (rendered) with a Monte Carlo path-tracer, a program that simulates the paths light follows through a virtual three-dimensional (3D) scene to produce the two-dimensional (2D) image frames of the final film. To achieve accurate colors, shadows, and lighting, however, millions of light paths must be computed, and a single frame can take up to a week to render. It's possible to render an image in only a few minutes using just a few light paths, but doing so causes inaccuracies that show up as objectionable “noise” in the final 2D image.
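The trade-off between the number of light paths and the amount of noise can be illustrated with a toy experiment. The sketch below is not a path-tracer: it assumes each simulated path either reaches a light (value 1.0) or does not (value 0.0), and the names `HIT_PROBABILITY`, `render_pixel`, and `noise_level` are hypothetical, chosen only for illustration. It measures how much the estimated brightness varies from pixel to pixel as the number of samples grows.

```python
import random
import statistics

# Assumed "true" brightness of every pixel in this toy scene (hypothetical value).
HIT_PROBABILITY = 0.3


def render_pixel(num_samples, rng):
    """Estimate one pixel's brightness by averaging random light-path samples.

    Toy stand-in for a path tracer: each path contributes 1.0 with
    probability HIT_PROBABILITY and 0.0 otherwise.
    """
    hits = sum(1.0 for _ in range(num_samples) if rng.random() < HIT_PROBABILITY)
    return hits / num_samples


def noise_level(num_samples, num_pixels=1000, seed=0):
    """Standard deviation of the estimates across many identical pixels."""
    rng = random.Random(seed)
    estimates = [render_pixel(num_samples, rng) for _ in range(num_pixels)]
    return statistics.pstdev(estimates)


if __name__ == "__main__":
    for n in (4, 64, 1024):
        print(f"{n:5d} samples per pixel -> noise {noise_level(n):.4f}")
```

In a Monte Carlo estimate like this one, the noise shrinks only in proportion to the square root of the sample count, so halving the noise takes roughly four times as many samples. That slow convergence is why clean frames are so expensive to render.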

In 2008, Pradeep Sen, now an associate professor in the Electrical and Computer Engineering Department at the UCSB College of Engineering (CoE), saw the problem first-hand while spending part of the summer at Sony Pictures Imageworks (SPI). SPI was one of the first studios to try using Monte Carlo path-tracers for film production, initially on Monster House and again on Cloudy with a Chance of Meatballs. Sometimes, the Monte Carlo noise was so overwhelming that it was difficult to see what was going on in the scene.

Sen began to think of solutions to the problem. Although there had been more than two decades of research on ways to reduce this noise, previous approaches were either impractical or ineffective. Sen was particularly intrigued by the idea of applying a filter to the final image to clean up the Monte Carlo noise, a process now known as Monte Carlo (MC) denoising. Although MC denoising was introduced in the early 1990s, the results were so unsatisfactory that the entire area of research was essentially dropped.

Sen knew that if he could build a filter to remove most of the MC noise from an image rendered in just a few minutes, to produce a high-quality image that looked as if it had been rendered over many days, it would be a game-changer in feature-film production. But when he asked people at SPI and top researchers elsewhere about the potential of MC denoising, everyone said that it would never work, for many reasons. The biggest concern was that, in trying to remove the noise, the filter would blur desired image detail. For high-quality film production, this was unacceptable.

Undeterred, Sen returned to his lab at the University of New Mexico, where he was working at the time, and, with graduate student Soheil Darabi, soon developed the two key ideas that would form the foundation of every high-quality MC denoiser that followed: 1) rather than using only the final noisy pixel colors for denoising, which makes it hard to distinguish between actual noise and detail, a lot of additional information calculated during rendering could be leveraged to improve denoising; and 2) the filter would need to be adjusted, and this information processed differently, for each pixel in order to remove the different kinds of noise that can occur in complex scenes.
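The two ideas can be sketched with a much simpler, well-known technique, a cross-bilateral filter. This is not the RPF algorithm itself. The sketch assumes a single row of pixels and one noise-free auxiliary buffer (called `feature` here, standing in for something like scene depth); all function names and parameter values are hypothetical. Each output pixel averages its neighbors, but a neighbor counts only if its auxiliary value is similar, so the weights differ from pixel to pixel and edges in the scene are not blurred.

```python
import math
import random


def cross_bilateral_1d(noisy, feature, radius=3, sigma_spatial=2.0, sigma_feature=0.1):
    """Denoise a row of pixels using an auxiliary feature buffer to guide the weights."""
    out = []
    for i in range(len(noisy)):
        total, weight_sum = 0.0, 0.0
        for j in range(max(0, i - radius), min(len(noisy), i + radius + 1)):
            # Distance in image space and in auxiliary-feature space.
            d_space = (i - j) / sigma_spatial
            d_feat = (feature[i] - feature[j]) / sigma_feature
            # Neighbors across a feature edge receive a near-zero weight.
            w = math.exp(-0.5 * (d_space * d_space + d_feat * d_feat))
            total += w * noisy[j]
            weight_sum += w
        out.append(total / weight_sum)
    return out


def make_row(width=64, noise=0.2, seed=1):
    """Build a toy row: a dark region next to a bright one, plus Gaussian noise."""
    rng = random.Random(seed)
    feature = [0.0 if x < width // 2 else 1.0 for x in range(width)]  # assumed depth-like buffer
    clean = [0.2 if x < width // 2 else 0.8 for x in range(width)]
    noisy = [c + rng.gauss(0.0, noise) for c in clean]
    return clean, feature, noisy


def mean_abs_error(a, b):
    """Average absolute difference between two rows of pixels."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    clean, feature, noisy = make_row()
    denoised = cross_bilateral_1d(noisy, feature)
    print("error before:", mean_abs_error(noisy, clean))
    print("error after: ", mean_abs_error(denoised, clean))
```

A filter that looked only at the noisy colors could not tell the step between the dark and bright regions apart from a large noise spike. Here the auxiliary buffer marks where the real edge is, which is the intuition behind using rendering information beyond the pixel colors.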

Based on these ideas, Sen and Darabi spent the next two years developing what they called Random Parameter Filtering (RPF), the first high-quality MC denoiser. When the findings were made public in 2011, they generated a tremendous amount of interest. “People were excited,” Sen recalls. “MC denoising was not something anyone was really studying, so this came out of left field and caught many by surprise.”

Suddenly, MC denoising became a hot area of research, and researchers around the country began working on MC denoising systems. Sen, by then at UCSB, and two PhD students, Nima Khademi Kalantari and Steve Bako, began work on the next-generation MC denoiser. Their idea was to use machine learning, which had been used to address standard image denoising. But could it be used for Monte Carlo denoising?

Initially, the team considered training an end-to-end system to remove MC noise from a rendered image automatically. But that would have required a lot of training data (in this case, 3D scenes), which they did not have. So they simplified the problem by keeping the filter from the RPF system and replacing the statistical calculations that had been used to adjust it with a new machine-learning model. The resulting algorithm, called Learning-Based Filtering (LBF), offered a big improvement in denoising quality and was published at SIGGRAPH 2015, the leading digital graphics conference. It caught the attention of Disney’s Pixar Animation Studios, which soon hired Bako as an intern to work on MC denoising.

“Steve was one of the main architects of the LBF system, which was state-of-the-art at the time,” Sen says. “That made him one of the world’s leading experts on implementing high-quality MC denoising systems. It’s no wonder Pixar wanted to hire him.”

Together, UCSB and Disney/Pixar built upon the LBF system to develop a deep-learning system that would take in noisy rendered images and directly compute high-quality, noise-free images suitable for feature-film production. To train the system, they used millions of examples from the Pixar film Finding Dory, and then tested it on scenes from Cars 3 and Coco. Their MC denoiser produced high-quality results comparable to the final frames in the films, and a paper on that work was published at SIGGRAPH 2017.

An image divided into two sections showing before (top left) and after Monte Carlo noise reduction.

Thanks to the work of Sen and his team, MC denoising is now a well-established technique for dramatically reducing Monte Carlo rendering times. The graphics hardware giant NVIDIA recently released an MC denoiser for its interactive Iray path-tracer, and the startup company Innobright was established to develop and market high-end MC denoising algorithms. Most feature-film studios and effects houses have migrated to path-traced rendering coupled with MC denoising to reduce render times. In fact, Monte Carlo denoising has recently been credited as one of the two main reasons that Monte Carlo path-tracing has become ubiquitous in feature-film production.

But Sen isn’t satisfied.

“There is still a lot more progress we can make, particularly when dealing with renderings that have very few samples per pixel,” he says. “Improving results in that domain would drastically impact real-time rendering, which is used in video games,” he adds, and would thereby improve the interactive experience. Nearly ten years on, Sen continues to turn down the volume on Monte Carlo noise.

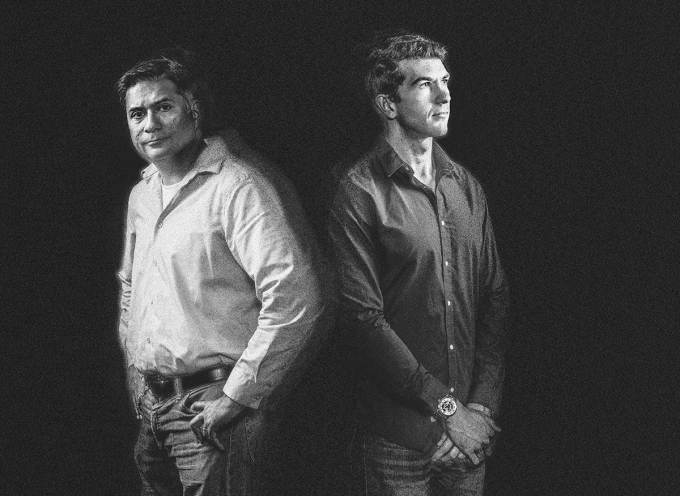

Prof. Pradeep Sen and PhD student Steve Bako have worked to reduce the kind of noise that diminishes this image.